Learn How AI Ensures Fairness and Equality

By reading this article, you will learn:

– How bias impacts fairness and equality in AI decisions.

– The metrics and measures used to assess fairness and equality in AI decisions.

– Techniques and strategies employed to ensure fairness and equality in AI decisions.

How does AI software ensure fairness and equality in its decisions? Artificial intelligence (AI) now plays a role in decision-making across many industries, from automating routine tasks to making complex choices. As AI systems take on more consequential decisions, making sure those decisions are fair and equitable demands attention. Fairness is not automatic: it has to be measured and built into how a system is designed, trained, and monitored. This article examines how bias enters AI systems, how fairness is measured, and the techniques used to reduce bias in AI decisions.

Understanding Bias in AI

Definition and Types of Bias in AI

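Bias in AI refers to systematic errors or skews that lead an AI system to treat some individuals or groups unfairly. Common types include selection bias, where the training data does not represent the population the system will be applied to; confirmation bias, where the people building a system favor data or results that confirm what they already believe; and algorithmic bias, where the model's design, features, or objective produce skewed outcomes. Each can significantly affect the outcomes of AI-driven decisions.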

Examples and Real-World Implications of Biased AI Decisions

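Biased AI decisions have been observed in areas such as hiring, loan approvals, and criminal justice. In recruitment, for example, algorithms trained on past hiring data have been found to favor certain demographic groups over others, perpetuating inequality and discrimination. In one widely reported case, Amazon abandoned an experimental résumé-screening tool after finding that it penalized résumés containing the word “women’s”.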

How Bias Can Impact Fairness and Equality in AI Decisions

Biases in AI can produce systematically different outcomes for different groups. Because models learn from historical data, they can reproduce and even amplify the disparities recorded in that data, reinforcing societal inequities and undermining the principle of fairness. Addressing these biases is necessary for AI decisions to be equitable and free of discrimination.

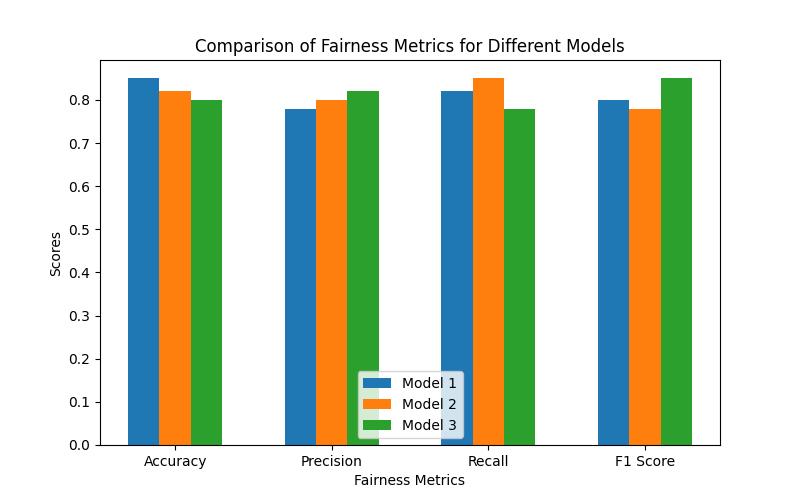

Fairness Metrics and Evaluation

Disparate Impact Analysis

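Disparate impact analysis assesses whether an AI system’s decisions have a disproportionately adverse effect on certain groups, particularly those protected under anti-discrimination laws. A common test compares selection rates (the share of each group that receives the favorable outcome) by dividing the protected group’s rate by the rate of the most favored group. Under the “four-fifths rule” used in US employment guidelines, a ratio below 0.8 is generally treated as evidence of adverse impact.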
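As a minimal sketch, a disparate impact ratio can be computed from a list of binary decisions and a parallel list of group labels. The function names and the screening data below are hypothetical, and the 0.8 cutoff is the four-fifths rule of thumb from US employment guidelines, used here only as an illustrative threshold.

```python
def selection_rate(y_pred, groups, group):
    """Fraction of members of `group` who received the favorable outcome (1)."""
    outcomes = [pred for pred, g in zip(y_pred, groups) if g == group]
    return sum(outcomes) / len(outcomes)


def disparate_impact_ratio(y_pred, groups, protected, reference):
    """Protected group's selection rate divided by the reference group's rate."""
    return selection_rate(y_pred, groups, protected) / selection_rate(y_pred, groups, reference)


# Hypothetical screening decisions: 1 = advanced to interview, 0 = rejected.
y_pred = [1, 0, 0, 1, 0, 1, 1, 1, 0, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

ratio = disparate_impact_ratio(y_pred, groups, protected="A", reference="B")
print(f"disparate impact ratio: {ratio:.2f}")
# Illustrative four-fifths threshold; a flag calls for review, not a verdict.
if ratio < 0.8:
    print("possible adverse impact")
else:
    print("within the four-fifths threshold")
```

Here group A is selected at a rate of 0.4 and group B at 0.8, so the ratio is 0.5 and the check flags possible adverse impact. A low ratio is a signal for closer review rather than proof of discrimination.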

Demographic Parity

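Demographic parity, also called statistical parity, requires that favorable outcomes be granted at the same rate across demographic groups: the probability that the system produces a favorable outcome should not depend on group membership. It does not consider whether individuals in each group actually qualify for that outcome.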
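A simple way to quantify demographic parity is the largest gap in selection rate between any two groups, where 0 means perfect parity. This sketch assumes binary predictions and one group label per individual; the function names and the loan data are hypothetical.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Map each group to the fraction of its members predicted favorable (1)."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan approvals (1 = approved) for three groups.
y_pred = [1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B", "C", "C", "C", "C"]

print(selection_rates(y_pred, groups))
print(demographic_parity_difference(y_pred, groups))
```

Group A is approved at a rate of 0.75 while groups B and C are approved at 0.5, giving a demographic parity difference of 0.25.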

Equal Opportunity

Equal opportunity focuses on individuals who actually qualify for a favorable outcome: among them, every group should have the same chance of receiving it, regardless of background or attributes. Formally, the system’s true positive rate should be equal across groups.
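Equal opportunity can be checked by computing the true positive rate separately for each group, counting only individuals whose true label is favorable. This is a minimal sketch with hypothetical function names and hypothetical loan data, assuming binary labels and predictions.

```python
def true_positive_rate(y_true, y_pred, groups, group):
    """Among members of `group` whose true label is 1, the share predicted 1."""
    preds_for_qualified = [
        pred for truth, pred, g in zip(y_true, y_pred, groups) if g == group and truth == 1
    ]
    return sum(preds_for_qualified) / len(preds_for_qualified)


def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest true-positive-rate gap between any two groups (0 = equal opportunity)."""
    rates = [true_positive_rate(y_true, y_pred, groups, g) for g in sorted(set(groups))]
    return max(rates) - min(rates)


# Hypothetical data: y_true = 1 means the applicant actually repaid a loan,
# y_pred = 1 means the model approved the application.
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0]
groups = ["A"] * 6 + ["B"] * 6

for g in ("A", "B"):
    print(g, true_positive_rate(y_true, y_pred, groups, g))
print("equal opportunity difference:", equal_opportunity_difference(y_true, y_pred, groups))
```

Qualified applicants in group A are approved 75% of the time versus 50% in group B, a gap of 0.25. The approved applicant in group A who did not repay does not affect this metric, which looks only at people whose true label is 1.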

Metrics and Measures Used to Assess Fairness and Equality in AI Decisions

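Various quantitative and qualitative measures are used to evaluate the fairness of AI decisions, including statistical parity, predictive parity, and per-group confusion matrices. Predictive parity requires that a favorable prediction be equally reliable for every group, meaning the precision (positive predictive value) is the same across groups. Computing a separate confusion matrix of true positives, false positives, false negatives, and true negatives for each group yields the selection and error rates from which many of these metrics, including those used in disparate impact analysis, are derived.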
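The sketch below builds a confusion matrix for each group and derives several rates from it, including precision for a predictive parity check. The function names and the loan data are hypothetical, and binary labels and predictions are assumed.

```python
from collections import Counter


def confusion_by_group(y_true, y_pred, groups):
    """Return {group: Counter of 'tp', 'fp', 'fn', 'tn'} for binary labels."""
    matrices = {}
    for truth, pred, g in zip(y_true, y_pred, groups):
        if pred:
            cell = "tp" if truth else "fp"
        else:
            cell = "fn" if truth else "tn"
        matrices.setdefault(g, Counter())[cell] += 1
    return matrices


def group_rates(cm):
    """Derive fairness-relevant rates from one group's confusion matrix."""
    def ratio(num, den):
        return num / den if den else float("nan")

    total = cm["tp"] + cm["fp"] + cm["fn"] + cm["tn"]
    return {
        "selection_rate": ratio(cm["tp"] + cm["fp"], total),  # statistical parity
        "tpr": ratio(cm["tp"], cm["tp"] + cm["fn"]),  # equal opportunity
        "fpr": ratio(cm["fp"], cm["fp"] + cm["tn"]),
        "precision": ratio(cm["tp"], cm["tp"] + cm["fp"]),  # predictive parity
    }


# Hypothetical loan data: y_true = 1 means repaid, y_pred = 1 means approved.
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0]
groups = ["A"] * 6 + ["B"] * 6

for g, cm in sorted(confusion_by_group(y_true, y_pred, groups).items()):
    rates = group_rates(cm)
    print(g, dict(cm), {name: round(value, 2) for name, value in rates.items()})
```

In this toy data the metrics disagree: group A has the higher true positive rate, but group B has the higher precision and the lower false positive rate. Which gap matters most depends on the application, so choosing a metric is partly a judgment call.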

| Fairness Metric | What It Checks |
|---|---|
| Disparate Impact Analysis | Whether AI decisions disproportionately harm certain groups, particularly those protected under anti-discrimination laws, often by comparing selection rates. |
| Demographic (Statistical) Parity | Whether favorable outcomes are granted at the same rate across demographic groups, irrespective of their characteristics. |
| Equal Opportunity | Whether qualified individuals in every group have the same chance of a favorable outcome (equal true positive rates). |
| Other Metrics and Measures | Quantitative and qualitative measures such as predictive parity (equal precision across groups) and per-group confusion matrices for analyzing disparate impact. |

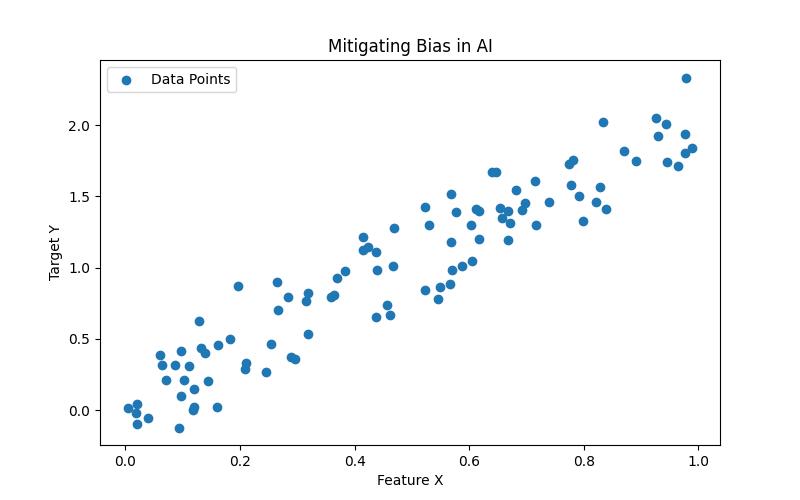

Mitigating Bias in AI

Algorithmic Transparency

Transparency about the algorithms used by AI systems is crucial for identifying and correcting biases. Documenting how a system works, including its training data, input features, and objective, and opening it to outside review allows for scrutiny and the detection of discriminatory patterns.

Data Preprocessing

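Data preprocessing involves identifying and mitigating biases in the training data used to develop AI models, such as underrepresented groups, skewed labels, or features that act as proxies for protected attributes (a postal code, for example, can correlate with race). Typical remedies include collecting more data, resampling or reweighting examples, and removing or transforming proxy features. This step is vital for limiting how much bias propagates into AI decisions.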
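One way to make a representation check concrete is to compare each group's share of the training data with its share of the population the model will serve, then derive sample weights that restore those shares. This is a minimal sketch: the group labels, the reference shares, and the function names are hypothetical, and reweighting by group is only one of several possible remedies.

```python
from collections import Counter


def representation_gaps(train_groups, reference_shares):
    """Training share minus reference share per group; negative = underrepresented."""
    counts = Counter(train_groups)
    n = len(train_groups)
    return {g: counts[g] / n - share for g, share in reference_shares.items()}


def rebalancing_weights(train_groups, reference_shares):
    """Per-group sample weights that make weighted group shares match the reference.

    Assumes every group in reference_shares appears at least once in the data.
    """
    counts = Counter(train_groups)
    n = len(train_groups)
    return {g: share / (counts[g] / n) for g, share in reference_shares.items()}


# Hypothetical training set (one group label per example) and hypothetical
# population shares the model is expected to serve.
train_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference_shares = {"A": 0.60, "B": 0.25, "C": 0.15}

for g, gap in representation_gaps(train_groups, reference_shares).items():
    print(f"{g}: {gap:+.2f}")
print(rebalancing_weights(train_groups, reference_shares))
```

Groups B and C each fall 10 percentage points short of their reference share, so their examples receive weights above 1 while group A's examples are down-weighted.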

Model Interpretability

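Enhancing the interpretability of AI models enables stakeholders to understand how decisions are reached. Techniques such as feature-importance scores show which inputs drive a model’s predictions, making it easier to spot a decision that depends on a protected attribute or a proxy for one and to correct biased outcomes.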
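Permutation importance is one simple, model-agnostic interpretability technique: shuffle one input column and measure how much accuracy drops. The sketch below assumes a toy rule-based model and hypothetical features, including a postal-area flag standing in for a proxy attribute; it is illustrative rather than a production tool.

```python
import random


def accuracy(model, X, y):
    """Share of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_features, n_repeats=20, seed=0):
    """Mean drop in accuracy when one feature column is randomly shuffled.

    A large drop means the model relies on that feature; a value near zero
    means scrambling the feature barely affects accuracy.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical features: [income in thousands, years employed, postal-area flag].
FEATURES = ["income", "years_employed", "postal_flag"]


def model(row):
    """Toy approval rule that (unfairly) rejects applicants from flagged postal areas."""
    income, _years, postal_flag = row
    return int(income >= 50 and postal_flag == 0)


X = [[30, 2, 1], [60, 5, 0], [45, 1, 1], [80, 10, 0],
     [20, 3, 0], [55, 4, 1], [35, 7, 0], [70, 2, 1]]
y = [0, 1, 0, 1, 0, 0, 0, 0]  # hypothetical historical decisions the model reproduces

for name, score in zip(FEATURES, permutation_importance(model, X, y, n_features=3)):
    print(f"{name}: {score:.3f}")
```

years_employed scores exactly 0 because the toy model never reads it, while postal_flag scores above 0, exposing a dependence on a feature that could act as a proxy for a protected attribute. scikit-learn offers a fuller implementation as sklearn.inspection.permutation_importance.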

Techniques and Strategies Employed to Ensure Fairness and Equality in AI Decisions

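AI practitioners use techniques such as adversarial debiasing, reweighing, and fairness constraints to mitigate bias. Reweighing assigns weights to training examples so that group membership and outcome labels are statistically independent in the weighted data. Adversarial debiasing trains the model alongside an adversary that tries to predict the protected attribute from the model’s output, penalizing the model whenever the adversary succeeds. Fairness constraints add a criterion such as demographic parity or equal opportunity directly to the training objective, either as a hard constraint or as a penalty term.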
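Of the three techniques, reweighing is the simplest to show in a few lines; adversarial debiasing and fairness constraints need a full training loop, so they are not sketched here. This minimal example weights each training example by the product of its group's and label's marginal probabilities divided by their joint probability. The function names and the historical labels are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    Over-represented (group, label) combinations get weights below 1 and
    under-represented ones get weights above 1, so that in the weighted data
    group membership and label are statistically independent.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Weighted share of positive labels within one group."""
    num = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    den = sum(w for w, g in zip(weights, groups) if g == group)
    return num / den


# Hypothetical historical labels: group A has 3 of 4 positives, group B only 1 of 4.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(weights, groups, labels, g), 3))
```

After reweighing, both groups have a weighted positive rate of 0.5. The weights would typically be passed to a learner that accepts per-example weights, for instance through the sample_weight argument that many scikit-learn estimators accept in fit; AI Fairness 360 also provides a Reweighing preprocessor based on the same idea.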

Ethical Considerations

Implications of Biased AI Decision-Making

The ramifications of biased AI decisions extend beyond individual cases, impacting entire communities and perpetuating systemic inequality. Addressing bias is essential for upholding ethical standards in AI deployment.

Ethical and Moral Imperatives in Addressing Fairness and Equality in AI Systems

Ensuring fairness and equality in AI systems is not merely a technical concern but a moral imperative, necessitating ethical considerations in the development and use of AI technologies.

User and Stakeholder Concerns Regarding Fairness and Equality in AI Decisions

Stakeholders, including users and regulatory bodies, express concerns about the potential discriminatory effects of AI decisions, emphasizing the need for ethical and fair AI implementations.

Case Studies

Real-World Examples of Addressing Fairness and Equality in AI Systems

Several organizations have implemented measures to address bias in AI decision-making. IBM and Microsoft, for example, have worked to develop fair and transparent AI systems: IBM released the open-source AI Fairness 360 toolkit, and the Fairlearn toolkit began as a Microsoft project.

Best Practices and Lessons Learned in Ensuring Fairness and Equality in AI Decisions

Case studies provide valuable insights into the best practices and challenges encountered in the pursuit of fairness and equality in AI decision-making, offering lessons for future implementations.

Personal Experience: Overcoming Bias in AI Decision-Making

Addressing Unintentional Bias

During my time working as a data scientist at a healthcare tech company, I encountered a situation where our AI software inadvertently exhibited bias in its decision-making process. We had developed a predictive model to assist doctors in identifying high-risk patients who would benefit from early intervention. However, upon analyzing the model’s outcomes, we discovered that it was disproportionately underestimating the risk for patients from minority ethnic groups.

Implementing Mitigation Strategies

To rectify this issue, we examined the data and found that the training dataset lacked sufficient representation from diverse ethnic backgrounds. We collected and incorporated more comprehensive and inclusive data so that the model was trained on a balanced, representative dataset. We also used algorithmic transparency techniques to trace the decision-making process and identify the specific features contributing to the bias.

Results and Lessons Learned

After implementing these mitigation strategies, we observed a significant improvement in the model’s ability to accurately assess the risk for all patient demographics. This experience underscored the critical importance of actively addressing unintentional bias in AI systems and highlighted the positive impact of proactive measures in ensuring fairness and equality in decision-making.

This real-world experience solidified my commitment to advocating for fairness and equality in AI systems and reinforced the invaluable lessons learned in mitigating bias to uphold ethical standards in technology.

Regulatory Landscape

Current and Emerging Regulations and Guidelines

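Governments and regulatory bodies are increasingly issuing guidelines and regulations for the development and deployment of AI, with a specific focus on fairness, equality, and ethical use. The European Union’s AI Act, for example, sets requirements for high-risk AI systems, and New York City’s Local Law 144 requires bias audits of automated employment decision tools.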

Impact of Regulations on AI Fairness and Equality

Regulatory frameworks influence the design and operation of AI systems, shaping the industry’s approach to addressing bias and discrimination in AI decision-making.

Legal and Policy Frameworks for Ensuring Fairness and Equality in AI Decisions

Legal and policy frameworks outline the responsibilities and obligations of organizations and developers in ensuring fairness and equality in AI systems, reinforcing the importance of ethical AI practices.

Future Outlook

Potential Advancements in AI Technology

Advancements in AI, including the development of explainable AI and fairness-aware AI, hold promise for mitigating bias and enhancing fairness and equality in AI decision-making.

Ongoing Efforts to Address Bias and Discrimination in AI Systems

Continued research and collaboration within the AI community are driving efforts to proactively address bias and discrimination in AI, paving the way for more equitable and ethical AI applications.

Developing Trends in Ensuring Fairness and Equality in AI Decisions

Trends such as the integration of fairness tools into AI development platforms and the emergence of AI ethics committees signal a growing commitment to ensuring fairness and equality in AI decisions.

Expert Insights

Perspectives from AI Ethics, Data Science, and Technology Policy Experts

Insights from experts in AI ethics, data science, and technology policy shed light on the multifaceted considerations and strategies involved in promoting fairness and equality in AI decision-making.

Diverse Insights on AI Fairness and Equality in Decision-Making Processes

Diverse viewpoints from thought leaders and practitioners offer a comprehensive understanding of the complexities and nuances associated with ensuring fairness and equality in AI systems.

Resources and Tools

Open-Source Fairness Libraries

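Open-source fairness libraries such as AI Fairness 360 and Fairlearn equip developers with tools to assess and improve the fairness of their models. AI Fairness 360 provides fairness metrics and bias-mitigation algorithms, including reweighing and adversarial debiasing. Fairlearn offers MetricFrame for breaking model metrics down by group, along with mitigation algorithms that train models under fairness constraints.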

Educational Materials for Ensuring Fairness and Equality in AI Decision-Making

Educational resources, including courses and research papers, provide valuable knowledge for practitioners seeking to integrate fairness and equality principles into their AI implementations.

Questions and Answers

How does AI ensure fairness in decisions?

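AI does not guarantee fairness on its own. Developers measure outcomes with fairness metrics and apply mitigation techniques, such as rebalancing training data or training under fairness constraints, to minimize biased outcomes.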

What role does AI play in promoting equality?

AI can analyze data to identify and address disparities in decision-making.

How can AI software prevent unfair outcomes?

By using diverse and representative training data, AI can minimize biased results.

Who ensures the AI software’s fairness and equality?

Ethicists and developers collaborate to design and test for fairness.

What if AI unintentionally produces biased decisions?

Constant monitoring and updates are crucial to address any unintended biases.
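Monitoring can be as simple as recomputing a fairness metric on each new batch of production decisions and raising an alert when it drifts past a tolerance. In this minimal sketch, the batch format, the selection-rate-gap metric, the function names, and the 0.1 threshold are all hypothetical choices.

```python
def selection_rate_gap(y_pred, groups):
    """Largest difference in favorable-outcome rate between any two groups."""
    rates = []
    for g in set(groups):
        preds = [p for p, member in zip(y_pred, groups) if member == g]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)


def check_batches(batches, threshold=0.1):
    """Return (batch_index, gap) for every batch whose gap exceeds the threshold."""
    alerts = []
    for i, (y_pred, groups) in enumerate(batches):
        gap = selection_rate_gap(y_pred, groups)
        if gap > threshold:
            alerts.append((i, gap))
    return alerts


# Hypothetical weekly batches of (decisions, group labels) from a deployed model.
batches = [
    ([1, 0, 1, 0], ["A", "A", "B", "B"]),
    ([1, 1, 1, 0], ["A", "A", "B", "B"]),
    ([1, 0, 0, 1], ["A", "A", "B", "B"]),
]

for index, gap in check_batches(batches):
    print(f"batch {index}: selection-rate gap {gap:.2f} exceeds threshold")
```

Only the second batch (index 1) is flagged, with a gap of 0.50; an alert like this would prompt investigation and, if needed, retraining or other updates.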

Dr. Emily Johnson is a leading data scientist with over 15 years of experience in the field of artificial intelligence and machine learning. She holds a Ph.D. in Computer Science from Stanford University, where her research focused on developing algorithms to mitigate bias in AI decision-making processes. Dr. Johnson has published numerous articles and research papers in reputable journals, including the Journal of Machine Learning Research and the ACM Transactions on Intelligent Systems and Technology.

Her expertise in algorithmic transparency and model interpretability has led to her involvement in consulting for various tech companies and government agencies on ethical AI development. Dr. Johnson’s work has been cited in several industry reports and policy briefs, and she has been invited as a keynote speaker at international conferences on AI ethics and fairness. Additionally, she has collaborated with multi-disciplinary teams to develop open-source fairness libraries and educational materials aimed at promoting equality in AI decision-making.